Takeaways from CES 2024 for video service providers

The annual Consumer Electronics Show (CES 2024) always grabs global headlines with eye-catching new gadgets and ever-expanding TV screen sizes. This year was no different, with acres of coverage for LG and Samsung’s new transparent TV screens, the latest virtual reality concepts, and robots that will make you anything from a cocktail to a stir-fry.

But, like the bright lights and razzmatazz of the host city, Las Vegas, the show-floor glitz of CES 2024 is largely a temporary distraction for the video entertainment industry. Behind the scenes, there are serious conversations about the future of streaming. So what were video service providers really talking about at CES 2024?

Magine Pro’s Sales Director, Neil Fender, at CES 2024.

Interest in AI is becoming more targeted

Just as we saw at IBC and NAB in 2023, Artificial Intelligence (AI) continued to be a major buzzword. Our CEO, Matthew Wilkinson, touched on this important topic in his recent blog on Large Language Models and Generative AI. But the partners and customers (both current and future) that I spoke to in Las Vegas are increasingly focused on the practicalities of deploying this constantly evolving technology. They’ve gone beyond wondering how AI might revolutionise the industry in the future, to looking at very specific use cases that will advance their short-term goals, not just their longer-term planning.

With consumers around the world becoming increasingly cost-conscious, churn is a massive area of focus for every streaming service, no matter what combination of business models they’re using. The video services I spoke to are looking for a strong and immediate return on investment for any foray into AI. In particular, they want it to keep consumers engaged so they’re less likely to look elsewhere for content. Personalized content recommendations are the most often-cited strategy for growing engagement, so it was a pleasure to be able to talk about the AI-powered recommendation capabilities in Magine Pro’s end-to-end OTT streaming platform.

I was also happy to talk through some of the tactics that have paid dividends for our existing customers when tackling churn. For example, the “dunning” functionality in our advanced billing engine enables them to systematically communicate with consumers whose payment methods have expired or failed. This proactive strategy helps prevent involuntary churn, minimising customer loss and ensuring a positive user experience. Another proactive approach is to automate “win-back” strategies. This means offering targeted discounts to users who have recently churned, or who show signs of being a flight risk, to win them back or keep them on board.

We’ll have more about these tactics in our upcoming e-guide on advanced OTT monetization strategies. Register now to be among the first to get the guide, which will also explain some of the reasons we champion hybrid monetization models.

No moving out of the FAST-lane

I don’t think anyone was surprised to find that FAST – another of the biggest streaming trends of 2023 – was still a hot topic at CES. The low barriers to entry mean that FAST channels continue to be the most popular route into the streaming market for content owners, particularly in the US market.

The FAST trend I heard most about at CES, however, came from content owners who’ve dipped a toe into streaming with FAST channels via aggregation platforms like Roku, Amazon, LG, and Samsung, and who now want their own suite of streaming apps to build a direct relationship with consumers. We had some excellent conversations at CES with technology partners and customers about how we can help them make that ambition a reality with the Magine Pro OTT platform, because it’s quick and easy to launch, but also customizable so it can grow with their business.

Want to know more?

If you weren’t able to make it to CES 2024, you can book a meeting with our team to talk about how AI recommendations could improve your engagement, or how Magine Pro can elevate your content distribution and monetization strategies.

Revolutionise your content delivery with AI-based recommendations

OTT streaming platforms offer an extensive range of content to their users. However, people often find it challenging to choose from numerous options, and may become quickly overwhelmed, resulting in poor choices or no decision at all.

According to consumer research at Netflix [1], a user typically loses interest in selecting content after browsing for just 60 to 90 seconds and reviewing 10 to 20 titles. If the user cannot find anything of interest, there is a substantial risk of them discontinuing the service.

Recommendation systems provide a solution by offering users a personalised experience that makes them feel as if the streaming service was created exclusively for them, which enhances organic engagement.

Where we started

During our last Hack Days at Magine Pro, we challenged ourselves to create a recommendation service for the platform. We brought together a cross-functional team of engineers from the Backend, Infrastructure, and Media teams to tackle this problem from different domain perspectives.

The aim was to create an end-to-end real-time recommendation system for a streaming service. Modern machine learning recommendation algorithms use datasets of users, items, and their interactions as inputs. This data has to be streamed, cleaned, transformed, and fed into the model for training.

Using modular programming, a technique of breaking down a product into smaller, self-contained units that can be developed and tested independently, we built separate components and went through all stages of the recommendation system creation process.

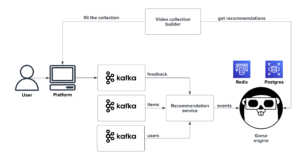

As a first proof of concept, we brought together the following services:

- Mux Analytics examines clickstream data to provide insights into video engagement, and integrates with AWS, our main cloud provider

- AWS Kinesis Data Streams provides the ability to stream large amounts of data in real-time

- AWS Lambda can be used to perform extract, transform, and load (ETL) jobs on the protobuf-encoded data we get from Mux

- AWS Personalize is a self-contained AI service that takes input data according to the schema and generates various types of recommendations
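To make the ETL step more concrete, here is a minimal sketch of a Lambda-style handler that reads a batch of Kinesis records and reduces each one to a user-item interaction. Kinesis delivers each record's payload base64-encoded under `Records[].kinesis.data`; everything else is an assumption for illustration. In particular, the field names (`viewer_id`, `video_id`, `ts`, `event`) are hypothetical, and a JSON decoder stands in for the protobuf parsing that the real data would need.

```python
import base64
import json
from typing import Any, Callable, Dict, List

# Hypothetical decoder type: turns the raw bytes of one record into a dict.
Decoder = Callable[[bytes], Dict[str, Any]]


def decode_json(payload: bytes) -> Dict[str, Any]:
    """Stand-in decoder; a real pipeline would parse protobuf here."""
    return json.loads(payload.decode("utf-8"))


def to_interaction(event: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only the fields a recommender needs (field names are assumptions)."""
    return {
        "user_id": str(event["viewer_id"]),
        "item_id": str(event["video_id"]),
        "event_type": event.get("event", "watch"),
        "timestamp": int(event["ts"]),
    }


def handler(
    event: Dict[str, Any],
    context: Any = None,
    decode: Decoder = decode_json,
) -> List[Dict[str, Any]]:
    """Lambda-style entry point for a batch of Kinesis records."""
    interactions = []
    for record in event.get("Records", []):
        # Kinesis payloads arrive base64-encoded inside the Lambda event.
        raw = base64.b64decode(record["kinesis"]["data"])
        try:
            interactions.append(to_interaction(decode(raw)))
        except (KeyError, TypeError, ValueError):
            # Skip malformed records instead of failing the whole batch.
            continue
    return interactions
```

Passing the decoder in as a parameter keeps the transformation logic testable without a protobuf schema, and malformed records are dropped rather than blocking the rest of the batch.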

That worked like a charm! As a next step, our team tried to reduce the costs, since using the Mux/AWS integration and AWS Personalize was expensive. We also wanted to experiment with different recommendation engines to find the one best suited to our needs.

Minimising the costs

The team started to think about how we can make this feature less expensive for Magine Pro and our partners.

Here are the steps we performed to decrease the costs:

- Replaced the Mux Analytics module with Apache Kafka, a distributed streaming platform, running in our AWS infrastructure setup

- Created a separate backend service for the ETL jobs, which listened to the Kafka topics, modified and enriched the messages with additional information, and passed the resulting data downstream

- Integrated Gorse, another recommendation engine, into our system, utilising Redis as our in-memory data store and a fully managed Postgres relational database, both running on AWS

The modularity of the system gives us flexibility in many ways. For example, we can introduce yet another recommendation engine and compare them to decide which one provides the best results. No need to rebuild the entire setup; only one component has to be modified.
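As a rough illustration of why modularity makes this kind of comparison cheap, the sketch below hides each engine behind one small interface and scores the engines on the same held-out feedback. The `Recommender` protocol, the `StaticEngine` toy class, and the hit-rate metric are all hypothetical names chosen for this example; they are not the interfaces of any particular engine.

```python
from typing import Dict, List, Protocol, Set


class Recommender(Protocol):
    """Minimal interface every engine adapter is assumed to implement."""

    def recommend(self, user_id: str, n: int) -> List[str]: ...


class StaticEngine:
    """Toy engine that returns the same ranked list for every user."""

    def __init__(self, ranked_items: List[str]) -> None:
        self.ranked_items = ranked_items

    def recommend(self, user_id: str, n: int) -> List[str]:
        return self.ranked_items[:n]


def hit_rate(engine: Recommender, held_out: Dict[str, Set[str]], n: int = 3) -> float:
    """Share of users with at least one held-out item in their top n."""
    if not held_out:
        return 0.0
    hits = sum(
        1
        for user, liked in held_out.items()
        if liked & set(engine.recommend(user, n))
    )
    return hits / len(held_out)


def pick_best(engines: Dict[str, Recommender], held_out: Dict[str, Set[str]], n: int = 3) -> str:
    """Return the name of the engine with the highest hit rate."""
    return max(engines, key=lambda name: hit_rate(engines[name], held_out, n))
```

Adding a third engine then means writing one more adapter with a `recommend` method; the comparison code does not change.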

Key concepts

Under the hood, Magine Pro uses a recommendation engine that automatically chooses the best machine learning technique for the most accurate results. The model processes real-time clickstream data from the platform, capturing diverse user behaviours. The following recommendation types are supported:

- Most popular

- Latest releases

- Because you watched X

- More like X

- Top picks for you

Magine Pro’s recommendation system includes a vast selection of diverse recommendation types which can be customised to align with your business requirements.

Recommendation algorithms

There are many algorithms that can be used in recommendation engines, so let’s look closer at the most popular ones. Recommendation algorithms are categorised into non-personalised algorithms, which provide identical content to all users, and personalised ones, which recommend unique content to each user based on their clickstream data.

The Latest Items algorithm shows users the newest items according to their timestamps, so that a new item is exposed to users promptly.

The Popular Items algorithm counts positive feedback within a specified time window and selects the items with the most.
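Both non-personalised algorithms are simple enough to sketch in a few lines. The version below assumes release times and feedback timestamps are plain integers (for example, Unix seconds) and that every feedback entry passed in is already positive; the function and parameter names are ours, chosen for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def latest_items(release_times: Dict[str, int], n: int) -> List[str]:
    """Return the n most recently released items, newest first."""
    ranked = sorted(release_times, key=lambda item: release_times[item], reverse=True)
    return ranked[:n]


def popular_items(
    feedback: Iterable[Tuple[str, int]],  # (item id, timestamp) of positive feedback
    now: int,
    window: int,
    n: int,
) -> List[str]:
    """Return the n items with the most positive feedback in the last `window` units."""
    counts = Counter(item for item, ts in feedback if now - window <= ts <= now)
    return [item for item, _ in counts.most_common(n)]
```

The time window matters: in the usage below, item `c` has the most feedback overall, but none of it is recent, so it does not appear.

```python
feedback = [("a", 90), ("a", 95), ("b", 99), ("c", 10), ("c", 11), ("c", 12)]
print(popular_items(feedback, now=100, window=20, n=2))  # ['a', 'b']
```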

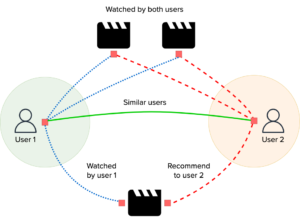

Item-based and user-based collaborative filtering algorithms find similarities in users’ past behaviour and make predictions for a user based on the preferences they share with other users.

It uses a simple yet effective approach – people often get the most helpful recommendation from people with similar tastes.

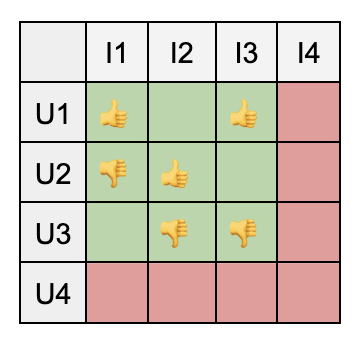
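A minimal user-based variant can be sketched as follows, assuming binary feedback (a user either liked an item or did not) and cosine similarity between users' sets of liked items. This is one common formulation, not necessarily the one a production engine uses.

```python
from math import sqrt
from typing import Dict, List, Set


def cosine(a: Set[str], b: Set[str]) -> float:
    """Cosine similarity between two sets of liked items (binary feedback)."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def recommend_user_based(likes: Dict[str, Set[str]], user: str, n: int) -> List[str]:
    """Score unseen items by the summed similarity of the users who liked them."""
    seen = likes.get(user, set())
    scores: Dict[str, float] = {}
    for other, items in likes.items():
        if other == user:
            continue
        sim = cosine(seen, items)
        if sim == 0.0:
            continue  # users with no overlap contribute nothing
        for item in items - seen:
            scores[item] = scores.get(item, 0.0) + sim
    # Highest score first; ties are broken by item id for a stable order.
    return sorted(scores, key=lambda item: (-scores[item], item))[:n]
```

In the example below, `u2` shares both of `u1`'s likes, so `u2`'s extra item ranks above the item from the less similar `u3`.

```python
likes = {"u1": {"a", "b"}, "u2": {"a", "b", "c"}, "u3": {"a", "d"}, "u4": {"e"}}
print(recommend_user_based(likes, "u1", 2))  # ['c', 'd']
```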

The matrix factorization algorithm represents users and items as vectors of latent factors learned from their interactions, and recommends the items whose vectors best match a user’s.
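To show the idea on a small scale, here is a sketch of matrix factorization trained with plain stochastic gradient descent on explicit ratings. The hyperparameters (`k`, `lr`, `reg`, `epochs`) are arbitrary illustrative defaults, and production engines typically use more refined variants of this technique.

```python
import random
from typing import List, Sequence, Tuple

Rating = Tuple[int, int, float]  # (user index, item index, rating)
Matrix = List[List[float]]


def dot(p: Sequence[float], q: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(p, q))


def mse(ratings: Sequence[Rating], P: Matrix, Q: Matrix) -> float:
    """Mean squared error of the predictions on the observed ratings."""
    return sum((r - dot(P[u], Q[i])) ** 2 for u, i, r in ratings) / len(ratings)


def factorize(
    ratings: Sequence[Rating],
    n_users: int,
    n_items: int,
    k: int = 2,        # number of latent factors
    lr: float = 0.02,  # learning rate
    reg: float = 0.01, # L2 regularisation strength
    epochs: int = 500,
    seed: int = 0,
) -> Tuple[Matrix, Matrix]:
    """Learn user factors P and item factors Q so that P[u] . Q[i] approximates r."""
    rng = random.Random(seed)
    P = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - dot(P[u], Q[i])
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on the squared error with L2 regularisation.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q
```

Once trained, the predicted score for any user-item pair, including pairs never observed, is `dot(P[u], Q[i])`, and the highest-scoring unseen items become the recommendations.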

Magine Pro’s recommendation system offers all of these options, using a hybrid approach that combines a range of rating and sorting algorithms.

The Cold Start Problem

The cold start problem refers to a situation where recommendation engines cannot provide optimal results due to suboptimal conditions, similar to a car engine having difficulty starting in cold weather. The two categories of cold start are item cold start and user cold start.

Cold start problem:

What to recommend to a new user U4?

Who to show the new movie I4?

Collaborative filtering algorithms find better items to recommend as the number of user actions on an item grows. However, they fall short for new users or items, because the model has no interactions from which to predict anything for them.

To address the cold start problem for new users, our recommendation service suggests the most popular and latest items to them.
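One way to express this fallback is sketched below. It assumes the personalised engine is available as a callable and that the popular and latest lists have already been computed; interleaving the two lists and the `min_interactions` threshold are our own illustrative choices, not a description of the production logic.

```python
from itertools import chain, zip_longest
from typing import Callable, Dict, List, Set


def recommend_with_fallback(
    user: str,
    history: Dict[str, Set[str]],
    personalised: Callable[[str, int], List[str]],  # hypothetical engine call
    popular: List[str],
    latest: List[str],
    n: int,
    min_interactions: int = 1,
) -> List[str]:
    """Use personalised picks when the user has history, then fill with popular/latest."""
    seen = history.get(user, set())
    picks = list(personalised(user, n)) if len(seen) >= min_interactions else []
    # Alternate popular and latest items so that new content also gets exposure.
    interleaved = [
        item
        for item in chain.from_iterable(zip_longest(popular, latest))
        if item is not None
    ]
    for item in interleaved:
        if len(picks) >= n:
            break
        if item not in picks and item not in seen:
            picks.append(item)
    return picks[:n]
```

With this shape, a brand-new user such as U4 receives only popular and latest titles, and a new item such as I4 reaches users through the latest list until it has gathered enough feedback of its own.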

Evaluation

Model evaluation is an essential part of the work on recommendation engines and refers to estimating the performance of a recommender system.

The system automatically holds out a small part of the feedback for evaluation. The aim is to maximise the probability that the user likes the recommended items, so the positive feedback rate can be used as a metric.

In the recommendation engine, we use standard ML evaluation metrics to evaluate ratings and predictions: precision, recall, and area under the curve (AUC), each of which ranges from 0 (worst) to 1 (best).
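For readers who want to see how these metrics are calculated, here is a small sketch using their textbook definitions. It computes precision and recall over the top `k` recommendations and AUC as the probability that a randomly chosen positive example is scored above a randomly chosen negative one. A recommendation engine may compute them slightly differently internally, so treat this as illustrative.

```python
from typing import Sequence, Set


def precision_at_k(recommended: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k recommendations that the user actually liked."""
    top = recommended[:k]
    if not top:
        return 0.0
    return sum(1 for item in top if item in relevant) / len(top)


def recall_at_k(recommended: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the user's liked items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    return sum(1 for item in recommended[:k] if item in relevant) / len(relevant)


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a positive is scored above a negative (ties count as half)."""
    pos = [s for s, label in zip(scores, labels) if label == 1]
    neg = [s for s, label in zip(scores, labels) if label == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Note that an AUC of 0.5 corresponds to random ranking, so values well above 0.5 are what indicate a useful model.

```python
print(precision_at_k(["a", "b", "c", "d"], {"a", "c", "e"}, 4))  # 0.5
print(auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))                   # 0.75
```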

As you can see in the screenshot from the recommendation engine dashboard, our model demonstrates impressive performance.

What’s next?

Currently, our team is going through the final steps of feature development, evaluating results to ensure that the system we have built is resilient, highly available, and provides the best possible results.

We are also looking into the potential of integrating a content promotion feature for our partners.

Furthermore, we have long-term plans, such as integrating another recommendation engine into our modular system and creating a scalable testing solution to select the most appropriate model that meets our partners’ needs.

Conclusion

This post has highlighted the significance of recommendation systems in OTT streaming services, explored various algorithms, and delved into the details of recommendation engines. Magine Pro will soon launch a new recommendation service, which served here as an example to highlight common issues encountered in building such services and to introduce frequently used performance metrics.

Don’t forget to subscribe to the Magine Pro e-newsletter to stay up-to-date with our latest news, partners, and products, and to find out which industry events we’ll be attending next.